What is Big-O Notation?

Big-O Notation is a basic tool used by computer scientists to analyze algorithms. An algorithm is a well-defined sequence of steps for solving a specific problem, and its cost can be measured. Big-O Notation describes how that cost, typically running time or memory use, grows as the input grows.

The O stands for "order", as in the order of growth of a function, while n refers to the size of the input. Writing O(n), for example, says that the number of operations grows at most in proportion to n.

Why is Big-O Notation important?

Big-O Notation is important in expressing how an algorithm performs as its input grows. It helps programmers reason about the efficiency and scalability of an algorithm, so they can tell whether it is well suited to the problem they’re trying to solve or whether another approach would be better. It covers both the speed of solving the problem and the amount of memory the algorithm consumes. We’ll discuss this in more detail.

How Big-O Notation works

Big-O Notation describes how an algorithm's cost grows with the size of its input. That cost is measured along 2 dimensions.

- Time complexity

- Space complexity

Time complexity describes how the running time of an algorithm grows with the size of its input, usually counted as the number of basic operations the algorithm executes. It uses ‘n’ to refer to the size of the input.

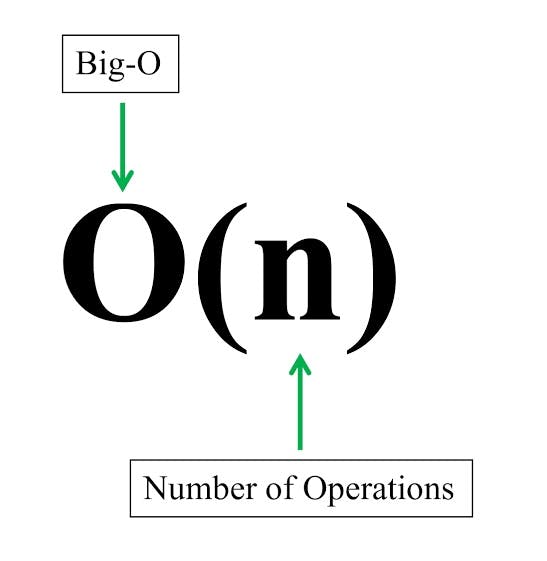

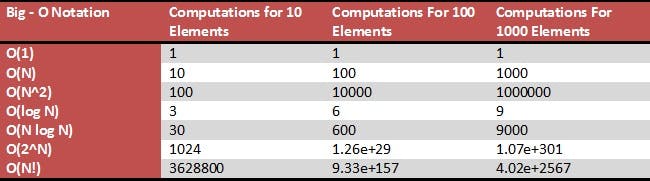

The Big-O complexity charts plot operations against elements. The elements are the size of the input (n), while the operations are the number of steps the algorithm performs on an input of that size.

Let’s take a look at some of the time complexities from the chart. We’ll consider the most basic ones.

O(1)-Constant Time Complexity

O(1) is referred to as constant time complexity because the time of execution stays the same regardless of the size of the input.

If an array has 10 elements, an O(1) operation on it, such as reading an element by index, takes about the same amount of time as it would on an array with a million elements. Constant time is the best growth rate an algorithm can have, and that is why the chart rates it as excellent.
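As a minimal sketch of constant time, the hypothetical helper `get_first` below (a name chosen only for this illustration) reads one element by index. It performs a single operation whether the list holds 10 items or a million.

```python
def get_first(items):
    """Return the first element: one index operation, whatever len(items) is."""
    return items[0]


small = list(range(10))
large = list(range(1_000_000))

# Both calls do the same amount of work despite the size difference.
print(get_first(small))  # 0
print(get_first(large))  # 0
```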

O(n)-Linear Time Complexity

O(n) runs in linear time. That means the run time grows in direct proportion to the size of the input (n): double the input and the run time roughly doubles. Looping over an array of 100 elements takes longer than looping over an array of 5 elements, about 20 times longer. This proportional relationship is why it is referred to as linear. O(n) is slower than O(1) or O(log n) on large inputs, but it is still considered reasonable, and it is the best possible for any task that has to look at every element at least once.
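A linear search is one common illustration of linear time. The sketch below is only an example, and the name `linear_search` and its comparison counter are assumptions added to make the growth visible. In the worst case it compares the target against every element.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for index, value in enumerate(items):
        comparisons += 1
        if value == target:
            return index, comparisons
    return -1, comparisons


# Searching for the last element forces one comparison per item.
print(linear_search(list(range(5)), 4))     # (4, 5)
print(linear_search(list(range(100)), 99))  # (99, 100)
```

Going from 5 elements to 100 raises the worst-case comparison count from 5 to 100, which matches the proportional growth described above.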

O(log n)-Logarithmic Time Complexity

O(log n) is a very good time complexity. If an algorithm runs in O(log n), the number of operations increases very slowly as the input grows: the input has to grow exponentially for the operations to grow linearly. If it takes 3 seconds to process 10 elements, it will take about 6 seconds to process 100 elements and about 9 seconds to process 1000 elements, because each tenfold increase in input adds roughly the same amount of work. Binary search on a sorted array is a good example of logarithmic time complexity.
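To make the logarithmic growth concrete, here is a minimal sketch of binary search on a sorted list. The step counter is an assumption added purely for illustration. Each pass through the loop halves the remaining range, so the step count grows with the logarithm of the input size.

```python
def binary_search(sorted_items, target):
    """Return (index, steps); index is -1 if target is absent."""
    steps = 0
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            low = mid + 1   # discard the lower half
        else:
            high = mid - 1  # discard the upper half
    return -1, steps


# Search for a value that is not present, so the loop runs to exhaustion.
for size in (10, 100, 1000):
    _, steps = binary_search(list(range(size)), -1)
    print(size, steps)  # prints: 10 3, then 100 6, then 1000 9
```

The input grows tenfold each time, yet the step count rises by only three, since log2(10) is about 3.3.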

Run time is not the only thing to consider when determining the performance of an algorithm. The memory usage of the program matters as well, and this is the Space complexity.

Space complexity measures the amount of memory an algorithm uses while it runs, as a function of the input size. The space here covers the input itself plus any extra variables and data structures the algorithm allocates. Space complexity is determined by 2 factors.

- The input size, together with the amount of storage required for each item.

- The implementation of the program, which determines how much additional memory is used.
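The effect of implementation on memory can be sketched with two hypothetical functions (`total` and `running_totals` are names invented for this example). Both read the same input, but one keeps a single accumulator, which is O(1) extra space, while the other builds a new list with one entry per input item, which is O(n) extra space.

```python
def total(numbers):
    """O(1) extra space: one accumulator, no matter how long the input is."""
    result = 0
    for value in numbers:
        result += value
    return result


def running_totals(numbers):
    """O(n) extra space: the output list grows with the input."""
    totals = []
    current = 0
    for value in numbers:
        current += value
        totals.append(current)
    return totals


print(total([1, 2, 3, 4]))           # 10
print(running_totals([1, 2, 3, 4]))  # [1, 3, 6, 10]
```

Both functions run in O(n) time, so the difference between them shows up only in space complexity.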

Space and Time complexity are 2 integral factors in measuring the efficiency of an algorithm, although in practice time complexity is often the one given more weight.